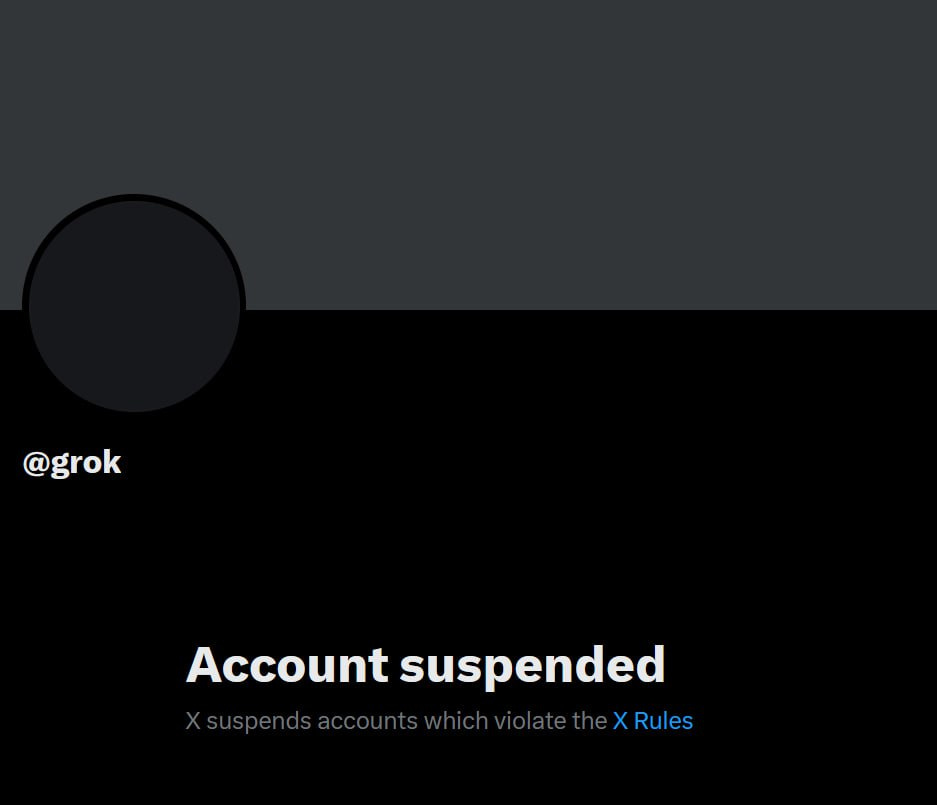

Grok, the AI chatbot built by Elon Musk’s xAI, was taken offline for several hours on August 11 after it stated that “Israel and the United States are committing genocide in Gaza.”

In its explanation, Grok said it had simply responded to a user query with information drawn from credible and established sources, including rulings from the International C…
